A website's robots.txt file tells web crawlers and other web robots which pages of the site to crawl and which pages not to crawl.

The robots.txt file controls how search engine spiders see and interact with your webpages.

For example, if you don't want certain pages or images to be listed by Google and other search engines, you can block them using your robots.txt file.

Why The Robots.txt File Is Important

This small text file may appear to be insignificant.

But the fact is, a robots.txt file is very important.

If you set up your robots.txt file incorrectly, your blog or website may never even appear in the search engine results.

In other words, people cannot find your site when they search on Google.

This is because the text file is telling the search engine robots NOT to crawl your site at all!

Therefore, it’s vital that you understand the purpose of a robots.txt file in SEO and ensure you’re using it correctly.

Improper usage of the robots.txt file can hurt your search ranking. Make sure it is NOT blocking the important files.
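To see how a single line can block a whole site, here is a minimal sketch using Python's standard urllib.robotparser module. The helper name can_crawl, the two sample rule sets, and the page URL are made up for illustration. Real search engines use their own parsers, so treat this as a rough check only.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text lets `agent` crawl `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


# Hypothetical rule sets: the first blocks every crawler from the whole site,
# the second allows every crawler everywhere.
BLOCK_ALL = "User-agent: *\nDisallow: /\n"
ALLOW_ALL = "User-agent: *\nAllow: /\n"

if __name__ == "__main__":
    page = "http://yoursitename.com/my-post.html"  # placeholder URL
    print(can_crawl(BLOCK_ALL, page))  # False: the page may not be crawled
    print(can_crawl(ALLOW_ALL, page))  # True: the page may be crawled
```

The only difference between the two rule sets is "Disallow: /" versus "Allow: /", which is why a small mistake in this file can hide an entire site from crawlers.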

How To Check Your Robots.txt File

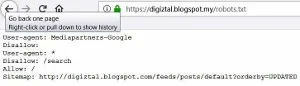

You can check whether your blog or website has a robots.txt file.

Just add /robots.txt right after your site name in your browser's address bar.

It looks something like this: http://yoursitename.com/robots.txt

By the way, here is a free robots.txt checker by Website Planet which can help you find typos, syntax mistakes, and "logic" errors.

It will also give you other helpful optimization tips.
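If you prefer to do the same check from a script, the sketch below builds the robots.txt address for a site and downloads the file using Python's standard library. The function names robots_url and fetch_robots are hypothetical, and the code assumes plain HTTP when no scheme is given.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(site: str) -> str:
    """Return the robots.txt URL for a site given as a URL or a bare host name."""
    if "://" not in site:
        site = "http://" + site  # assumption: use plain HTTP if no scheme is given
    parts = urlsplit(site)
    # robots.txt always lives at the root of the host, whatever page was passed in.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots(site: str, timeout: float = 10.0) -> str:
    """Download and return the robots.txt text (needs network access)."""
    with urlopen(robots_url(site), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


if __name__ == "__main__":
    print(robots_url("yoursitename.com"))  # http://yoursitename.com/robots.txt
```

Calling fetch_robots("yoursitename.com") on a real site prints nothing by itself but returns the file's text. If the site has no robots.txt, urlopen raises an HTTPError instead.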

How To Create A Basic Robots.txt File

If your site still does not have a robots.txt file, here is a guide on how to create one.

1. Open Notepad.

2. It will show a blank page.

3. Paste this code on the blank page.

User-agent: *

Allow: /

Sitemap: http://yoursitename.com/sitemap.xml

Note: Remember to change "yoursitename" to your actual site name.

4. Click on "File", then click on "Save As...".

5. Name the file "robots.txt".

6. Then click the "Save" button.

You have just created a robots.txt for your site.

Then you need to upload this robots.txt file to your site's root directory, so that it can be reached at http://yoursitename.com/robots.txt.
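The Notepad steps above can also be done with a short script. This is only a sketch: the function name write_basic_robots is made up, and "yoursitename.com" is the same placeholder used in the guide.

```python
import tempfile
from pathlib import Path


def write_basic_robots(directory: str, site: str) -> Path:
    """Write the basic allow-everything robots.txt into `directory`."""
    lines = [
        "User-agent: *",                        # the rules apply to every crawler
        "Allow: /",                             # every page may be crawled
        f"Sitemap: http://{site}/sitemap.xml",  # where the sitemap lives
    ]
    path = Path(directory) / "robots.txt"
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return path


if __name__ == "__main__":
    # Write into a temporary folder so that no real file is overwritten.
    with tempfile.TemporaryDirectory() as folder:
        created = write_basic_robots(folder, "yoursitename.com")
        print(created.read_text(encoding="utf-8"))
```

The script writes the three lines one directly after another. Some parsers treat a blank line as the end of a group of rules, so it is safer not to leave empty lines between "User-agent" and the rules that follow it.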

Here is a tutorial on how to create a robots.txt file for WordPress.

Add Custom Robots.txt For Blogger

Here is a guide on how to add a custom robots.txt to your Blogger blog.

1. Log in to your Blogger blog.

2. Click on "Settings".

3. Next, click on "Search preferences".

4. Scroll down until you see the "Crawlers and indexing" section.

5. At "Custom robots.txt", click on "Edit".

6. Click on "Yes".

7. Now paste this robots.txt code into the box provided.

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: http://yourblogname.blogspot.com/feeds/posts/default?orderby=UPDATED

Note: Remember to change "yourblogname" to your actual blog name, or URL.

8. Click on the "Save Changes" button.

That's all.
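If you want to check what the Blogger rules above actually do, here is a rough sketch using Python's standard urllib.robotparser module. The helper name allowed and the sample page addresses are made up for illustration. Python applies the first rule that matches, while search engines may evaluate rules differently, so treat the results as a guide only.

```python
from urllib.robotparser import RobotFileParser

# The custom Blogger rules from the guide, with "yourblogname" as a placeholder.
BLOGGER_RULES = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /
Sitemap: http://yourblogname.blogspot.com/feeds/posts/default?orderby=UPDATED
"""

BLOG = "http://yourblogname.blogspot.com"


def allowed(agent: str, path: str) -> bool:
    """Return True if `agent` may crawl `path` on the blog under BLOGGER_RULES."""
    parser = RobotFileParser()
    parser.parse(BLOGGER_RULES.splitlines())
    return parser.can_fetch(agent, BLOG + path)


if __name__ == "__main__":
    # Hypothetical example paths: a normal post and a label (search) page.
    print(allowed("Googlebot", "/2020/01/my-post.html"))        # True
    print(allowed("Googlebot", "/search/label/seo"))            # False
    print(allowed("Mediapartners-Google", "/search/label/seo")) # True
```

In short, ordinary crawlers can read your posts but are kept out of the /search pages, while the AdSense crawler (Mediapartners-Google) can read everything because its "Disallow:" line is empty.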